Overview

Beta

This Kubeflow component has beta status. See the Kubeflow versioning policies. The Kubeflow team is interested in your feedback about the usability of the feature.

What is Katib?

Katib is a Kubernetes-native project for automated machine learning (AutoML). Katib supports hyperparameter tuning, early stopping and neural architecture search (NAS). Learn more about AutoML at fast.ai, Google Cloud, Microsoft Azure or Amazon SageMaker.

Katib is agnostic to machine learning (ML) frameworks. It can tune hyperparameters of applications written in any language of the user's choice, and it natively supports many ML frameworks, such as TensorFlow, MXNet, PyTorch, and XGBoost.

Katib supports many AutoML algorithms, including Bayesian optimization, Tree of Parzen Estimators, Random Search, Covariance Matrix Adaptation Evolution Strategy, Hyperband, Efficient Neural Architecture Search, and Differentiable Architecture Search. Additional algorithm support is coming soon.

The Katib project is open source. The developer guide is a good starting point for developers who want to contribute to the project.

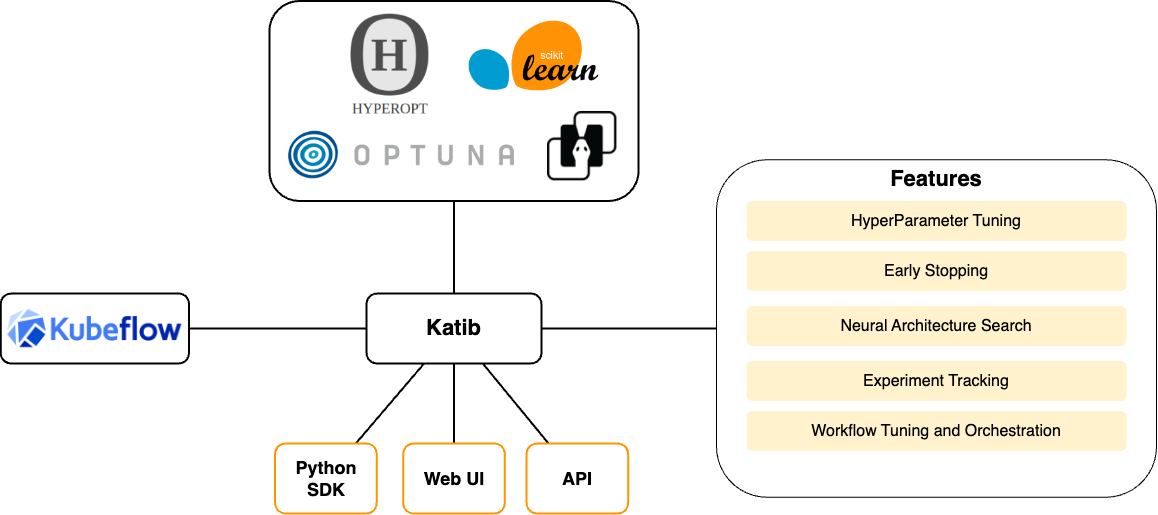

Architecture

This diagram shows the major features of Katib and supported optimization frameworks to perform various AutoML algorithms.

This diagram shows how Katib performs hyperparameter tuning:

First, you write the ML training code that Katib evaluates in every Trial with different hyperparameters. Then, using the Katib Python SDK, you set the objective, search space, search algorithm, and Trial resources, and create the Katib Experiment.

Follow the quickstart guide to create your first Katib Experiment.
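The two steps above can be sketched in Python as follows. This is a minimal sketch, not a definitive implementation: it assumes the `kubeflow-katib` package is installed and a cluster with Katib is reachable, and the argument names of `KatibClient.tune` may differ between SDK versions. The Experiment name, the `kubeflow` namespace, the toy formula inside `objective`, and the parameter ranges are illustrative assumptions.

```python
def objective(parameters):
    """Training code that Katib would run once per Trial.

    `parameters` holds the hyperparameter values assigned to this Trial.
    """
    # Toy formula standing in for a real training loop (assumption).
    result = 4 * int(parameters["a"]) - float(parameters["b"]) ** 2
    # Print the metric as `name=value` so a log-based metrics collector can read it.
    print(f"result={result}")
    return result  # returned only so the function can be checked locally


def submit_experiment():
    """Create the Experiment. Needs the Katib SDK and a cluster running Katib."""
    # Imported lazily so that this sketch loads even without the SDK installed.
    import kubeflow.katib as katib

    # Search space: one integer and one floating-point hyperparameter.
    parameters = {
        "a": katib.search.int(min=10, max=20),
        "b": katib.search.double(min=0.1, max=0.2),
    }

    client = katib.KatibClient(namespace="kubeflow")  # namespace is an assumption
    client.tune(
        name="tune-experiment",            # hypothetical Experiment name
        objective=objective,               # training code evaluated in each Trial
        parameters=parameters,             # search space
        algorithm_name="random",           # search algorithm
        objective_metric_name="result",    # metric printed by `objective`
        max_trial_count=12,
        resources_per_trial={"cpu": "2"},  # Trial resources
    )
```

Calling `objective({"a": "10", "b": "0.1"})` locally is a quick way to check the training code before handing it to Katib; `submit_experiment()` only works against a real cluster.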

Katib implements the following Custom Resource Definitions (CRDs) to tune hyperparameters:

Experiment

An Experiment is a single tuning run, also called an optimization run.

You specify configuration settings to define the Experiment. The following are the main configurations:

- Objective: What you want to optimize. This is the objective metric, also called the target variable. A common metric is the model's accuracy in the validation pass of the training job (validation-accuracy). You also specify whether you want the hyperparameter tuning job to maximize or minimize the metric.

- Search space: The set of all possible hyperparameter values that the hyperparameter tuning job should consider for optimization, and the constraints for each hyperparameter. Other names for search space include feasible set and solution space. For example, you may provide the names of the hyperparameters that you want to optimize. For each hyperparameter, you may provide a minimum and maximum value or a list of allowable values.

- Search algorithm: The algorithm to use when searching for the optimal hyperparameter values.

A Katib Experiment is defined as a Kubernetes CRD.

For details of how to define your Experiment, follow the guide to running an experiment.
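To make the three main configurations concrete, here is a hedged sketch of an Experiment written as a Python dictionary that mirrors the structure of the Experiment resource. The field names follow the `kubeflow.org/v1beta1` Experiment API as commonly documented; the Experiment name, the value ranges, and the `summarize` helper are illustrative assumptions. The Trial template, which a real Experiment also needs, is omitted for brevity.

```python
experiment = {
    "apiVersion": "kubeflow.org/v1beta1",
    "kind": "Experiment",
    "metadata": {"name": "example-experiment", "namespace": "kubeflow"},
    "spec": {
        # Objective: the metric to optimize and the direction.
        "objective": {
            "type": "maximize",
            "goal": 0.99,
            "objectiveMetricName": "validation-accuracy",
        },
        # Search algorithm.
        "algorithm": {"algorithmName": "random"},
        "maxTrialCount": 12,
        "parallelTrialCount": 3,
        # Search space: a min/max range or a list of allowable values.
        "parameters": [
            {"name": "lr", "parameterType": "double",
             "feasibleSpace": {"min": "0.01", "max": "0.05"}},
            {"name": "num-layers", "parameterType": "int",
             "feasibleSpace": {"min": "2", "max": "5"}},
            {"name": "optimizer", "parameterType": "categorical",
             "feasibleSpace": {"list": ["sgd", "adam", "ftrl"]}},
        ],
        # A real Experiment also needs a trialTemplate; omitted in this sketch.
    },
}


def summarize(exp):
    """Hypothetical helper: describe an Experiment in one line."""
    spec = exp["spec"]
    obj = spec["objective"]
    names = ", ".join(p["name"] for p in spec["parameters"])
    algorithm = spec["algorithm"]["algorithmName"]
    return f'{obj["type"]} {obj["objectiveMetricName"]} over {names} using {algorithm}'


print(summarize(experiment))
```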

Suggestion

A Suggestion is a set of hyperparameter values that the hyperparameter tuning process has proposed. Katib creates a Trial to evaluate the suggested set of values.

A Katib Suggestion is defined as a Kubernetes CRD.

Trial

A Trial is one iteration of the hyperparameter tuning process. A Trial corresponds to one worker job instance with a list of parameter assignments. The list of parameter assignments corresponds to a Suggestion.

Each Experiment runs several Trials. The Experiment runs the Trials until it reaches either the objective or the configured maximum number of Trials.
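The relationship between Experiment, Suggestion, and Trial can be illustrated with a small local simulation. This is only a sketch of the control flow described above, not Katib's controller logic: it runs Trials one at a time, uses random search, and replaces the worker job with a toy metric function. All names (`SEARCH_SPACE`, `suggest`, `run_trial`, `run_experiment`) and the metric formula are hypothetical.

```python
import random

# Search space: (min, max) for each hyperparameter (illustrative values).
SEARCH_SPACE = {"lr": (0.01, 0.05), "num_layers": (2, 5)}


def suggest(rng):
    """Suggestion: one set of hyperparameter values, drawn by random search."""
    return {
        "lr": rng.uniform(*SEARCH_SPACE["lr"]),
        "num_layers": rng.randint(*SEARCH_SPACE["num_layers"]),
    }


def run_trial(assignments):
    """Stand-in for a worker job: returns a toy accuracy that peaks at 0.95."""
    lr_penalty = 5 * abs(assignments["lr"] - 0.03)
    layer_penalty = 0.02 * abs(assignments["num_layers"] - 3)
    return 0.95 - lr_penalty - layer_penalty


def run_experiment(goal, max_trial_count, seed=0):
    """Run Trials until the goal is reached or max_trial_count (>= 1) is hit."""
    rng = random.Random(seed)
    trials = []
    while len(trials) < max_trial_count:
        assignments = suggest(rng)          # Suggestion
        metric = run_trial(assignments)     # Trial evaluated by a worker job
        trials.append({"assignments": assignments, "metric": metric})
        if metric >= goal:                  # objective reached: stop early
            break
    best = max(trials, key=lambda t: t["metric"])
    return trials, best


trials, best = run_experiment(goal=0.94, max_trial_count=20)
print(len(trials), best)
```

With an unreachable goal such as `0.99`, the loop always runs exactly `max_trial_count` Trials, which corresponds to the second stopping condition.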

A Katib Trial is defined as a Kubernetes CRD.

Worker job

The worker job is the process that runs to evaluate a Trial and calculate its objective value.

The worker job can be any type of Kubernetes resource or Kubernetes CRD. Follow the Trial template guide to learn how to support your own Kubernetes resource in Katib.

Katib has these CRD examples in upstream:

By offering the above worker job types, Katib supports multiple ML frameworks.

Hyperparameters and hyperparameter tuning

Hyperparameters are the variables that control the model training process. They include:

- The learning rate.

- The number of layers in a neural network.

- The number of nodes in each layer.

Hyperparameter values are not learned. In other words, in contrast to the node weights and other training parameters, the model training process does not adjust the hyperparameter values.
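The difference between a hyperparameter and a learned parameter can be seen in a tiny example. The sketch below fits `y = w * x` by gradient descent on three made-up data points: the weight `w` is adjusted by training, while the learning rate and the number of steps are fixed before training starts. The function name `train` and the data are assumptions made for illustration.

```python
def train(learning_rate, num_steps):
    """Fit y = w * x by gradient descent; return the learned weight and final loss."""
    xs = [1.0, 2.0, 3.0]
    ys = [2.0, 4.0, 6.0]   # generated with the "true" weight 2.0
    w = 0.0                # learned parameter: updated by training
    for _ in range(num_steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad   # learning_rate itself never changes
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    return w, loss


# Same training code, two hyperparameter settings.
for lr in (0.01, 0.05):
    w, loss = train(learning_rate=lr, num_steps=20)
    print(f"learning_rate={lr} w={w:.4f} loss={loss:.6f}")
```

With 20 steps, the larger learning rate gets much closer to the true weight than the smaller one, which is the kind of difference hyperparameter tuning searches for.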

Hyperparameter tuning is the process of optimizing the hyperparameter values to maximize the predictive accuracy of the model. If you don’t use Katib or a similar system for hyperparameter tuning, you need to run many training jobs yourself, manually adjusting the hyperparameters to find the optimal values.

Automated hyperparameter tuning works by optimizing a target variable, also called the objective metric, that you specify in the configuration for the hyperparameter tuning job. A common metric is the model’s accuracy in the validation pass of the training job (validation-accuracy). You also specify whether you want the hyperparameter tuning job to maximize or minimize the metric.

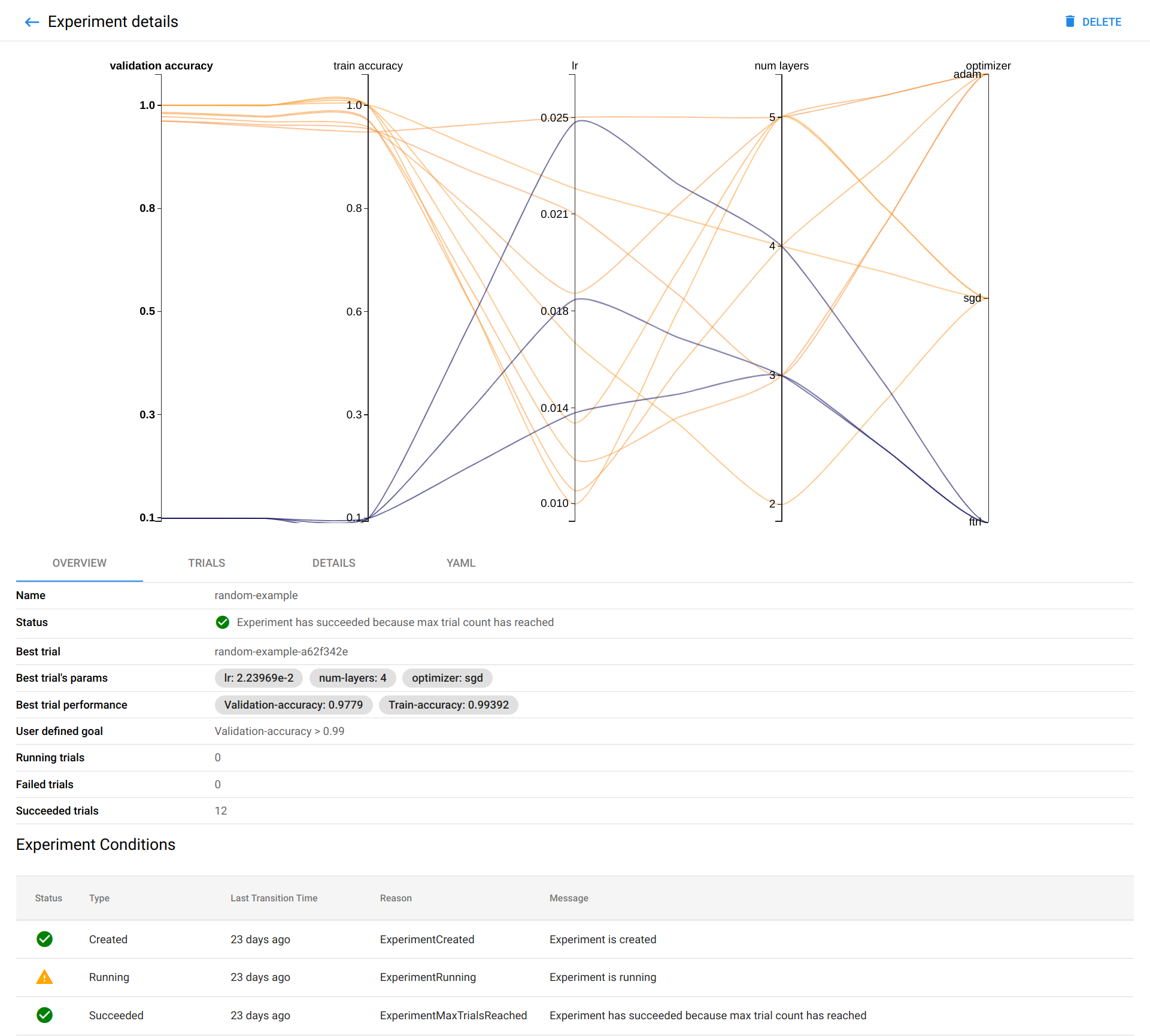

For example, the following graph from Katib shows the level of validation accuracy for various combinations of hyperparameter values (the learning rate, the number of layers, and the optimizer):

(To run the example that produced this graph, follow the getting-started guide.)

Katib runs several training jobs (known as Trials) within each hyperparameter tuning job (Experiment). Each Trial tests a different set of hyperparameter configurations. At the end of the Experiment, Katib outputs the optimized values for the hyperparameters.

You can improve your hyperparameter tuning Experiments by using early stopping techniques. Follow the early stopping guide for the details.
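As an illustration of the idea behind early stopping, the sketch below implements a simplified median stopping rule for a metric that should be maximized: a running Trial is stopped when its best value so far is below the median of the running averages of completed Trials at the same step. This is an assumption-laden sketch and not Katib's implementation; the function name `should_stop` and the sample histories are hypothetical.

```python
from statistics import median


def should_stop(history, completed_histories, step):
    """Return True if the running Trial should be stopped at `step` (1-based).

    history: metric values reported so far by the running Trial.
    completed_histories: per-step metric values of Trials that already finished.
    """
    if step < 1 or len(history) < step:
        return False
    # Running average of each completed Trial over its first `step` reports.
    averages = [sum(h[:step]) / step for h in completed_histories if len(h) >= step]
    if not averages:
        return False  # nothing to compare against yet
    return max(history[:step]) < median(averages)


completed = [[0.5, 0.6, 0.7], [0.6, 0.7, 0.8], [0.4, 0.5, 0.6]]
print(should_stop([0.3, 0.4], completed, step=2))  # weak Trial
print(should_stop([0.5, 0.7], completed, step=2))  # promising Trial
```

Stopping weak Trials early frees cluster resources for more promising hyperparameter combinations.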

Neural architecture search

Alpha version

NAS is currently in alpha with limited support. The Kubeflow team is interested in any feedback you may have, in particular with regard to the usability of the feature. You can log issues and comments in the Katib issue tracker.

In addition to hyperparameter tuning, Katib offers a neural architecture search feature. You can use NAS to design your artificial neural network, with the goal of maximizing the predictive accuracy and performance of your model.

NAS is closely related to hyperparameter tuning. Both are subsets of AutoML. While hyperparameter tuning optimizes the model’s hyperparameters, a NAS system optimizes the model’s structure, node weights and hyperparameters.

NAS technology in general uses various techniques to find the optimal neural network design.

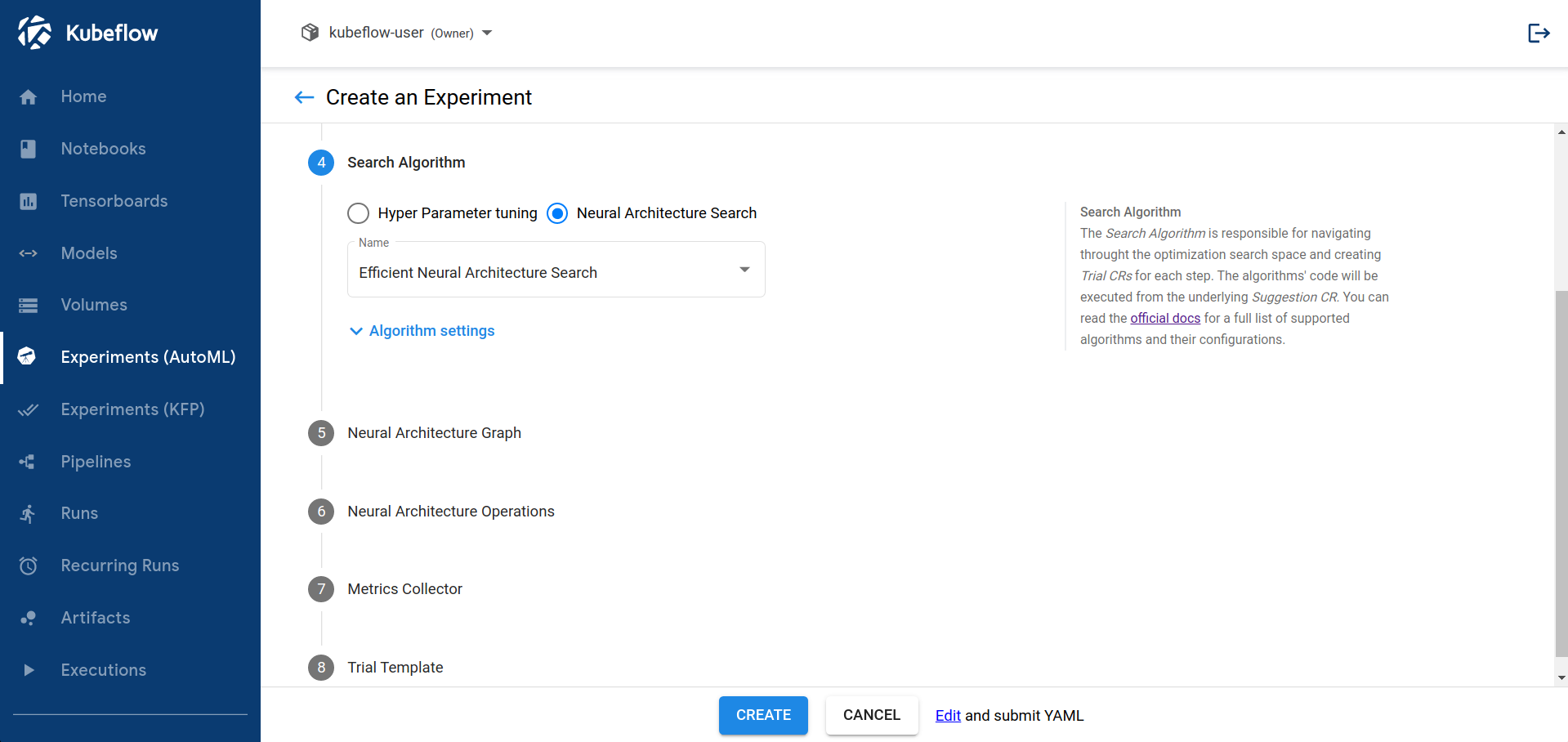

You can submit Katib jobs from the command line or from the UI. (Learn more about the Katib interfaces later on this page.) The following screenshot shows part of the form for submitting a NAS job from the Katib UI:

Katib interfaces

You can use the following interfaces to interact with Katib:

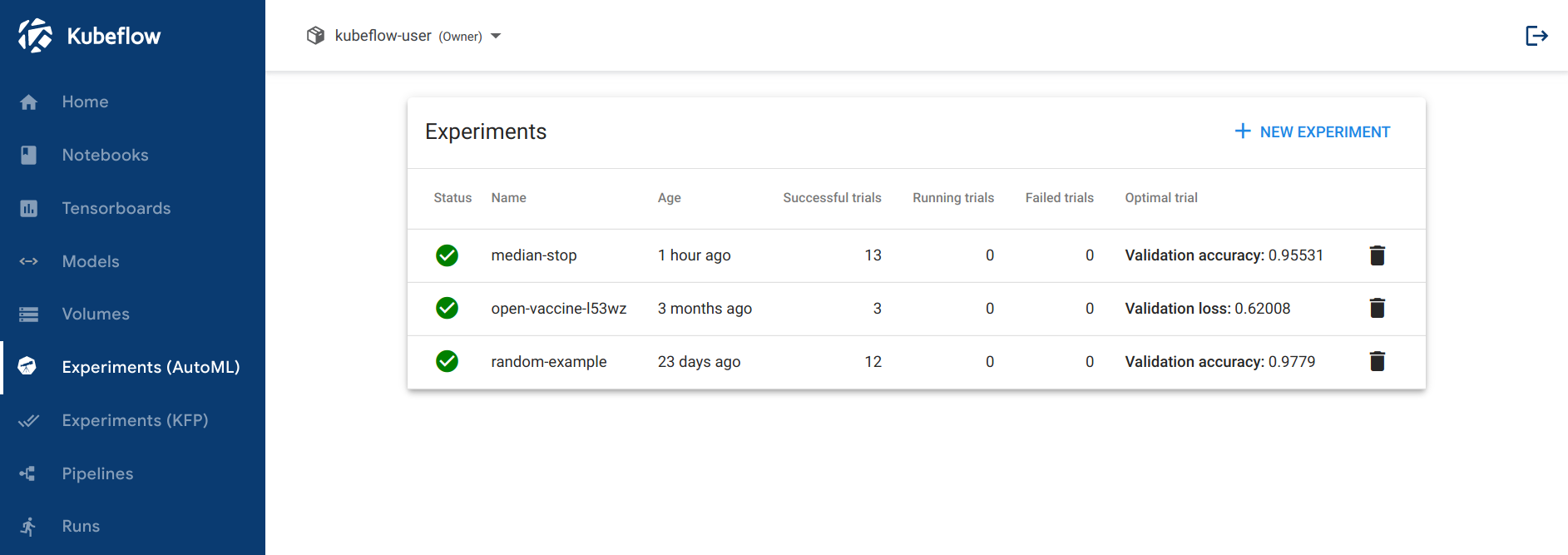

A web UI that you can use to submit Experiments and to monitor your results. Check the getting-started guide for information on how to access the UI. The Katib home page within Kubeflow looks like this:

A gRPC API. Check the API reference on GitHub.

Command-line interfaces (CLIs):

- The Kubernetes CLI, kubectl, is useful for running commands against your Kubeflow cluster. Learn about kubectl in the Kubernetes documentation.

Katib Python SDK. Check the Katib Python SDK documentation on GitHub.

Next steps

Follow the getting-started guide to set up Katib and run some hyperparameter tuning examples.
